De-biasing the performance review, once and for all.

Ideally, judgments about human capability would be comprehensive and impersonal: the product of standardized, data-driven performance systems. In practice, this ideal is seldom achieved, as a new study (and several others) suggests.

A recent and very interesting academic paper, based on data from about 30,000 employees at a retail chain, shows that women were 14% less likely than men to be promoted. A major factor behind this gap is that women were consistently misjudged as having lower management potential than men.

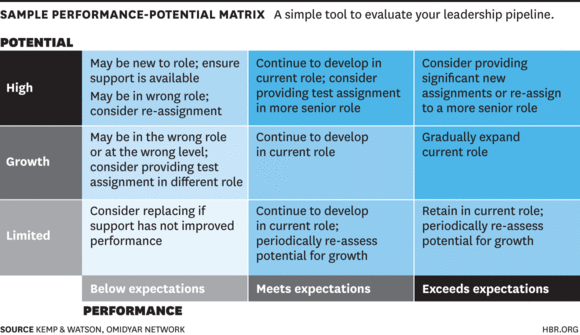

The authors (Alan Benson of the University of Minnesota, Danielle Li of MIT, and Kelly Shue of Yale) collected evaluation and promotion data for management-track employees working at a national retailer from 2011 to 2015. At this company, the performance review process involved supervisors placing their team members on a 9-box grid that rates people on both performance and potential. This is a fairly common tool (see the table below for an illustration).

Their analysis turned up three important findings:

- Women received lower potential ratings despite earning higher performance ratings. This gap in potential ratings accounts for *up to half* of the overall gender gap in promotions.

- Potential ratings appear to be biased against women. Among workers with the same current performance and potential ratings, women went on to receive higher performance ratings in the future, which suggests their potential had been underestimated.

- Potential ratings, although biased, were still informative about future performance. That is, assessments of an individual's potential are still useful and therefore shouldn't be discarded. Rather, they should be made more reliable.

On point #3, the authors suggest bias-conscious screening algorithms or anti-bias training programs as potential solutions. These might help, but the jury is still out on whether they are effective. Here are some thoughtful takes on the limits of each:

Bias-conscious algorithms: https://arxiv.org/pdf/2012.00423.pdf

Anti-bias training: https://www.scientificamerican.com/article/the-problem-with-implicit-bias-training/

I reckon the most promising (and most underrated) way to decontaminate judgments about merit is to make evaluation decisions less dependent on the judgment of a single supervisor, which is, sadly, the standard in most organizations. There are lots of practical alternatives in place at successful organizations like Google, Haier, Nucor, W. L. Gore, and Bridgewater Associates. In these organizations, evaluation decisions are never up to a single boss. Rather, they are (a) collegial, (b) driven by peer or customer input, and (c) transparent and ongoing (i.e., not a yearly exercise).

If you want to dig deeper into these examples, check out the Meritocracy chapter in Humanocracy. Laszlo Bock provides a detailed account of Google's approach in his wonderful book, Work Rules! And for a compelling summary of what a peer-based, real-time performance assessment looks like in practice, check out Ray Dalio's TED Talk about the approach taken by Bridgewater Associates, one of the world's largest and most successful hedge funds.

As this latest study (and several others) suggests, the ideal of comprehensive, impersonal judgments produced by standardized, data-driven performance systems is seldom achieved in practice.

Instead of clinging to this bureaucratic ideal and trying to perfect individual supervisors and decision-support tools so they can achieve it, we should focus on a new model: one that syndicates responsibility for these decisions and relies on the "wisdom of the crowd."

This path requires a significant shift in how prerogatives and power are distributed in organizations. But if we're truly committed to building workplaces in which people are free to contribute and succeed, irrespective of their credentials, demographics, or identity, this is the path we need to take.